How to measure an AI model's performance – F1 score explained

Organizations often ask us, “How well is the AI model doing?” or “How do I measure its performance?” We often respond, “The performance of the AI model is based on its F1 score,” and we get puzzled looks or the question, “What is an F1 score?” So here I am going to attempt to explain the F1 score in an easily understandable way.

Definition of F1 score:

The F1 score (also F-score or F-measure) is a measure of a test’s accuracy. It considers both the precision (p) and the recall (r) of the test to compute the score (as per Wikipedia).

Accuracy is what most people think of when it comes to measuring performance (Ex: How accurately is the model predicting?). But accuracy alone is not a complete measure of an AI model's performance. Accuracy only measures the fraction of predictions that are correct out of all predictions made. Although it is a useful measure of performance, it is incomplete and does not work well when the cost of false negatives is high. Ex: Let's assume we are using an AI model to detect cancer cells. After training, the model is fed 100 samples that all have cancer, and it identifies 90 of them as having cancer. That is 90% accuracy, which sounds pretty high. But missing the other 10 samples is very costly, because those are cancer cases that go undetected. Therefore accuracy is not always the best measure.
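To make the limitation concrete, here is a minimal sketch with made-up numbers (they are an assumption for illustration, not data from a real model): a data set where only 10 of 1000 samples have cancer, and a hypothetical model that always predicts "no cancer".

```python
# Hypothetical, made-up data: 1 = cancer, 0 = no cancer.
y_true = [1] * 10 + [0] * 990   # only 10 of 1000 samples actually have cancer
y_pred = [0] * 1000             # a useless model that always says "no cancer"

# Accuracy: correct predictions divided by all predictions.
correct = sum(t == p for t, p in zip(y_true, y_pred))
accuracy = correct / len(y_true)

# False negatives: cancer was present but the model predicted no cancer.
missed = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))

print(f"accuracy = {accuracy:.2%}")        # accuracy = 99.00%
print(f"missed cancer cases = {missed}")   # missed cancer cases = 10
```

In this sketch the model scores 99% accuracy while missing every single cancer case, which is why accuracy on its own can be misleading.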

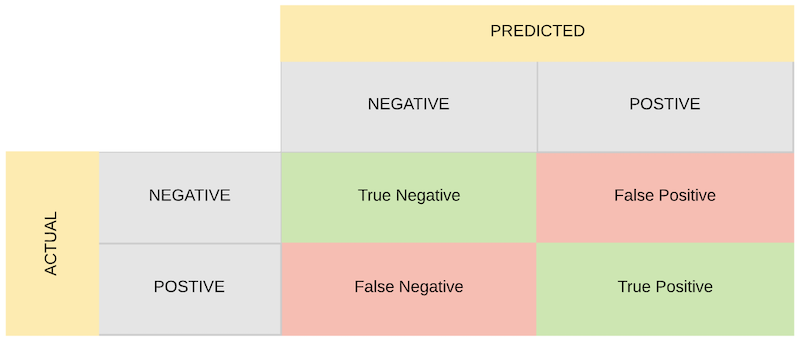

To explain it further, let's consider this table:

True Positive, False Positive, False Negative and True Negative:

True Positive is an outcome where the model correctly predicts the positive class. Ex: when cancer is present and the model predicts cancer.

False Positive is an outcome where the model incorrectly predicts the positive class. Ex: when cancer is not present and the model predicts cancer.

False Negative is an outcome where the model incorrectly predicts the negative class. Ex: when cancer is present and the model predicts no cancer.

True Negative is an outcome where the model correctly predicts the negative class. Ex: when cancer is not present and the model predicts no cancer.
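The four outcomes above can be counted directly from a list of actual labels and a list of predicted labels. The following is a minimal sketch; the function name `confusion_counts`, the `positive` parameter, and the sample labels are all assumptions made for illustration.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, fn, tn) for two equal-length label sequences."""
    tp = fp = fn = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1   # cancer present, model predicts cancer
            else:
                fp += 1   # cancer not present, model predicts cancer
        else:
            if actual == positive:
                fn += 1   # cancer present, model predicts no cancer
            else:
                tn += 1   # cancer not present, model predicts no cancer
    return tp, fp, fn, tn


# Made-up labels: 1 = cancer, 0 = no cancer.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]

tp, fp, fn, tn = confusion_counts(y_true, y_pred)
print(tp, fp, fn, tn)   # 3 1 1 3
```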

As explained by the definition, the F1 score is a combination of Precision and Recall.

Precision is the number of True Positives divided by the sum of True Positives and False Positives. Precision can be thought of as a measure of exactness. Therefore, low precision indicates a large number of False Positives.

Recall is the number of True Positives divided by the sum of True Positives and False Negatives. Recall can be thought of as a measure of completeness. Therefore, low recall indicates a large number of False Negatives.
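As a rough sketch, both definitions translate into one-line functions. The counts below reuse the 90 detected and 10 missed cancer samples from the earlier example; the 30 false positives are a made-up number added purely for illustration, and returning 0.0 when the denominator is zero is a convention chosen here, not part of the definition.

```python
def precision(tp, fp):
    """True Positives divided by everything the model predicted as positive."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """True Positives divided by everything that is actually positive."""
    return tp / (tp + fn) if (tp + fn) else 0.0


tp, fn = 90, 10   # 90 cancer samples found, 10 missed
fp = 30           # hypothetical: 30 healthy samples wrongly flagged as cancer

p = precision(tp, fp)
r = recall(tp, fn)
print(f"precision = {p:.2f}")   # precision = 0.75
print(f"recall    = {r:.2f}")   # recall    = 0.90
```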

Now, the F1 score is the harmonic mean of Precision and Recall. It is high only when both are high, which makes it a much better measure of the model than either one alone.

F1 Score = 2 * (precision * recall) / (precision + recall)
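The formula can be sketched in a few lines of Python. The function name `f1_score`, the zero-denominator handling, and the input values are assumptions for illustration. The second example shows why a harmonic mean is used: a model with perfect precision but very poor recall still gets a low F1 score, whereas a plain average would look deceptively reasonable.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * (precision * recall) / (precision + recall)


# Balanced case: made-up precision of 0.75 and recall of 0.90.
balanced = f1_score(0.75, 0.90)
print(f"F1 = {balanced:.2f}")   # F1 = 0.82

# Lopsided case: perfect precision, but only 10% of positives are found.
lopsided = f1_score(1.0, 0.1)
simple_average = (1.0 + 0.1) / 2
print(f"F1 = {lopsided:.2f}, simple average = {simple_average:.2f}")
# F1 = 0.18, simple average = 0.55
```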

A good F1 score means that you have few false positives and few false negatives. Accuracy is used when the True Positives and True Negatives are more important, while the F1 score is used when the False Negatives and False Positives are crucial.
