Leveraging RAG to Search Technical Manuals

In this article, we present our approach to leveraging Retrieval-Augmented Generation (RAG) to develop a robust and efficient solution for searching extensive collections of technical manuals used by engineers. This system addresses a variety of practical needs, including:

Locating Detailed Procedures: Engineers who are familiar with a maintenance procedure may require precise step-by-step instructions from a specific manual.

General Queries: Users may have free-form questions regarding installation, operation, maintenance procedures, or parts lists, necessitating accurate and relevant references from the manuals.

While RAG can significantly improve efficiency and accuracy across many applications, applying it to technical manuals introduces distinct challenges. This article reviews general RAG strategies, highlights key considerations specific to technical manuals, and presents practical solutions to these challenges.

General RAG Strategies:

A typical RAG-based search solution follows these steps and strategies:

Document Chunking: Breaking documents into smaller, manageable units (commonly referred to as “chunks” or “segments”) is a foundational practice in RAG. Instead of working with entire documents, which can span thousands of tokens, dividing them into smaller parts enhances retrieval and processing efficiency. Common approaches include:

Fixed-Length Token or Word Splits: For transformer-based models, documents are often divided into chunks of 200–500 tokens, with optional overlaps (e.g., a 50-token overlap) to preserve context across boundaries. Lewis et al. (2020), in “Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks,” follow this passage-level practice, retrieving over Wikipedia split into 100-word passages rather than whole articles.

Semantic or Paragraph-Based Splits: Many RAG systems utilize natural paragraph or section breaks to create semantically coherent chunks, ensuring better contextual integrity within each segment.

Hybrid Approach: A combination of methods can be employed, such as initially splitting based on headings or a table of contents and then further dividing longer sections that exceed a token limit.
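As a rough illustration of the fixed-length approach with overlap, here is a minimal Python sketch. The function name `split_with_overlap` and its defaults are our own choices for illustration, and whitespace-separated words stand in for a real model tokenizer, so actual token counts will differ.

```python
from typing import List


def split_with_overlap(tokens: List[str], chunk_size: int = 300, overlap: int = 50) -> List[List[str]]:
    """Split a token list into fixed-length chunks that share `overlap` tokens."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # the last chunk already reaches the end of the document
    return chunks


# Assumption: whitespace-style tokens stand in for a real tokenizer.
words = [f"w{i}" for i in range(700)]
chunks = split_with_overlap(words, chunk_size=300, overlap=50)
print([len(c) for c in chunks])
```

Each chunk repeats the last 50 tokens of the previous one, so a sentence that straddles a boundary still appears intact in at least one chunk.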

Two-Stage RAG Pipeline Search:

A typical RAG workflow involves a two-stage process:

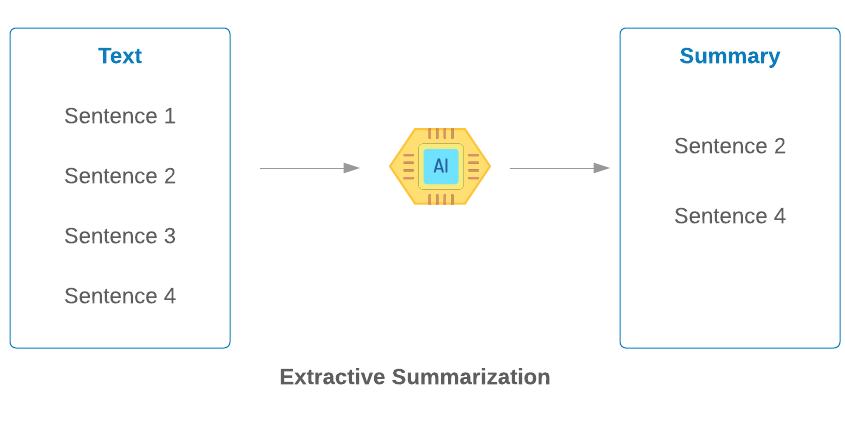

First-Stage Retrieval: Conduct a broad search using methods like vector similarity or keyword-based approaches to identify the top N relevant chunks.

Second-Stage Analysis: Utilize a more advanced language model or a task-specific model to analyze the top N chunks in greater depth. This stage often involves summarizing or extracting the most accurate and relevant answer for the given query.
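The two stages can be sketched in a few lines of Python. This is a toy version under stated assumptions: a bag-of-words cosine similarity stands in for a real embedding model, and the `analyze` callable is a hypothetical placeholder for whatever language model performs the second stage.

```python
import math
import re
from collections import Counter
from typing import Callable, List


def bag_of_words(text: str) -> Counter:
    # Lowercased word counts; a stand-in for a dense embedding.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def first_stage(query: str, chunks: List[str], top_n: int = 3) -> List[str]:
    """Broad retrieval: rank every chunk by similarity and keep the top N."""
    q = bag_of_words(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, bag_of_words(c)), reverse=True)
    return ranked[:top_n]


def second_stage(query: str, candidates: List[str], analyze: Callable[[str, List[str]], str]) -> str:
    """Deeper analysis of the shortlist; `analyze` would wrap a language model."""
    return analyze(query, candidates)


chunks = [
    "Replace the oil filter on Pump A every 500 hours.",
    "Torque the flange bolts on Motor B to the specified value.",
    "Drain the oil from Pump A before removing the filter housing.",
    "Warranty terms and contact information.",
]
query = "oil filter Pump A"
shortlist = first_stage(query, chunks, top_n=2)

# Hypothetical analyzer: pick the candidate sharing the most distinct query terms.
def toy_analyze(q: str, cands: List[str]) -> str:
    return max(cands, key=lambda c: len(set(bag_of_words(q)) & set(bag_of_words(c))))

print(second_stage(query, shortlist, toy_analyze))
```

The point of the split is cost: the cheap first stage scans everything, while the expensive second stage only sees a handful of candidates.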

By leveraging these strategies, RAG systems can efficiently handle large and complex documents while maintaining high retrieval accuracy and meaningful responses. However, although this general approach is effective across a range of applications, it encounters significant challenges when applied to searching large technical manuals.

Key Challenges with Technical Manuals and Other Scientific Documents

Complex Document Structure: Technical manuals are often organized with intricate hierarchies, including sections, subsections, tables, and appendices. Standard RAG methods may struggle to capture the semantic relationships across these structured components while preserving document structure, leading to incomplete or inaccurate retrievals. In many instances, critical information—such as precautions—may be located far from its logical position within the document hierarchy. When splitting documents into smaller segments, as commonly recommended in RAG workflows, this structural disconnect poses a risk of losing vital context. Conversely, retaining documents in larger chunks can introduce significant noise and irrelevant information, making it more challenging to precisely identify and retrieve the desired content. Balancing these trade-offs to preserve the underlying structure and accurately associate segments with their logical context remains a key challenge in optimizing RAG for technical manuals.

Additionally, top-level sections in technical manuals often provide high-level summaries or schedules, such as service intervals (e.g., “Overhaul every 45,000 hours”), while the detailed procedures are found in subordinate sections. A simplistic retrieval approach might match a user query (e.g., “45K-hour overhaul”) to the high-level summary, inadvertently missing the specific, actionable instructions located deeper in the document.

Moreover, in extensive manuals, specific procedures (such as oil changes) are frequently repeated in multiple sections, each tailored to a different component. Without careful attention to contextual markers like section identifiers or page numbers, a RAG system risks retrieving instructions intended for the wrong component, leading to confusion and potential errors. Addressing these issues requires strategies that preserve and use contextual and structural information effectively.

Many queries require referencing multiple sections or combining information across disparate parts of a manual. General RAG implementations may fail to effectively retrieve and synthesize information spread over multiple chunks.

Difficulty in Determining Optimal Chunk Size: Determining the appropriate size for each document chunk is a complex task. If the chunks are too small, critical context can be lost; for example, the steps of a single procedure may become fragmented across multiple chunks, diminishing coherence. Conversely, if the chunks are too large, they may include extraneous or unrelated content, introducing noise into the retrieval process. Striking the right balance is crucial to ensure that retrieval results are both relevant and contextually complete, enabling accurate and meaningful responses.

Domain-Specific Terminology: Technical manuals frequently use specialized language and jargon that may not align with the general-purpose embeddings used in RAG models. This mismatch can result in poor understanding of queries and suboptimal retrieval of relevant information.

Additionally, user queries for technical manuals often involve vague or incomplete descriptions, relying on the system’s ability to infer intent and context. General RAG systems may struggle to interpret such queries accurately in this specialized domain.

Addressing these challenges requires a tailored approach to adapting RAG for the specific complexities of technical manuals, including enhanced chunking strategies, domain-specific embeddings, and multi-pass retrieval techniques to improve accuracy and relevance.

Our Proposed Solution:

Leverage existing document structure

Most technical manuals are structured with well-defined organizational features, such as a Table of Contents (TOC), section headings, or numeric and typographic markers (e.g., font size, bold text). When a TOC is available, we utilize it to segment the document and build metadata for each section or segment. This approach ensures that the document’s original hierarchy is preserved.

In cases where a TOC is absent, we construct a logical outline based on section numbering and stylistic cues. This process maintains the integrity of the manual’s structure, ensuring, for example, that a sub-section titled “8.2 Maintenance Action X” is correctly nested under the broader “8 Maintenance” category, which in turn is part of the overarching “Equipment Component XYZ” section. By preserving the manual’s hierarchical organization, we enhance the system’s ability to retrieve relevant and contextually accurate information.
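To make the numbering-based outline concrete, here is a minimal sketch that infers parent-child relationships from dotted section numbers. It is an illustration only: the `HEADING` pattern and the function names are our assumptions, and the pattern would also match an ordinary line that happens to start with a number, which is why a real pipeline would combine it with stylistic cues such as font size or bold text.

```python
import re
from typing import Dict, List, Optional

# Assumption: headings look like "8.2 Maintenance Action X".
HEADING = re.compile(r"^(\d+(?:\.\d+)*)\s+(\S.*)$")


def build_outline(lines: List[str]) -> Dict[str, dict]:
    """Map each section number to its title and the number of its parent."""
    outline: Dict[str, dict] = {}
    for line in lines:
        match = HEADING.match(line.strip())
        if not match:
            continue
        number, title = match.group(1), match.group(2)
        # "8.2.1" -> parent "8.2"; a top-level "8" has no parent.
        parent: Optional[str] = number.rsplit(".", 1)[0] if "." in number else None
        outline[number] = {"title": title, "parent": parent}
    return outline


def breadcrumb(outline: Dict[str, dict], number: str) -> List[str]:
    """Walk up the parent links to produce the full path for a section."""
    path = []
    current: Optional[str] = number
    while current is not None and current in outline:
        path.append(f"{current} {outline[current]['title']}")
        current = outline[current]["parent"]
    return list(reversed(path))


lines = [
    "8 Maintenance",
    "General notes on upkeep.",
    "8.2 Maintenance Action X",
    "Remove the cover.",
    "8.2.1 Precautions",
]
outline = build_outline(lines)
print(breadcrumb(outline, "8.2.1"))
```

The resulting path can be stored with each segment so that a retrieved sub-section always carries its position in the manual.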

Evaluating and Optimizing Chunk Size

We investigated several common approaches to determining chunk size, including fixed-length splits, paragraph-based splits, sliding windows, and hybrid methods. While research, such as Lewis et al. (2020), suggests that smaller passages often improve retrieval accuracy in many scenarios, our experience with technical manuals revealed unique challenges. When segments are too small, they risk fragmenting critical context. For example, precautions, related sub-steps, or references to other sections may become separated from the main procedure, leading to incomplete or ambiguous retrieval results. Conversely, larger segments may include extraneous or irrelevant information, introducing noise into the search process. This can diminish the efficiency of retrieval and the ranking quality of results, as irrelevant content can dilute the focus on the user’s specific query.

To address these challenges, we adopted a balanced approach that prioritizes preserving the structure and logical flow of the manual while minimizing noise. Instead of adhering rigidly to a predefined chunk size, our segmentation ranges from a few hundred to a few thousand tokens, depending on the document’s structure and content. This approach ensures that segments are both contextually coherent and efficient for retrieval, aligning with the unique demands of technical manual searches.
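One way to picture this structure-first sizing is the sketch below: a section is kept whole when it fits under an upper bound and is otherwise split at paragraph breaks. The `max_tokens` value, the function names, and the whitespace word count used in place of a tokenizer are all illustrative assumptions rather than the exact rules of our pipeline.

```python
from typing import List, Tuple


def count_tokens(text: str) -> int:
    # Whitespace word count as a rough stand-in for a model tokenizer.
    return len(text.split())


def chunk_section(section_id: str, text: str, max_tokens: int = 2000) -> List[Tuple[str, str]]:
    """Keep a section whole when it fits; otherwise split it at paragraph breaks."""
    if count_tokens(text) <= max_tokens:
        return [(section_id, text)]
    chunks: List[Tuple[str, str]] = []
    current: List[str] = []
    size = 0
    for para in text.split("\n\n"):
        n = count_tokens(para)
        # Flush before the limit is exceeded; a single oversized paragraph stays whole.
        if current and size + n > max_tokens:
            chunks.append((section_id, "\n\n".join(current)))
            current, size = [], 0
        current.append(para)
        size += n
    if current:
        chunks.append((section_id, "\n\n".join(current)))
    return chunks


long_text = "a b c d\n\ne f g h\n\ni j k l"
print(chunk_section("8.2", long_text, max_tokens=8))
```

Every piece keeps the identifier of the section it came from, so a split procedure can still be traced back to one logical unit.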

Enhancing Document Indexing with Rich Metadata

Effective segmentation of technical manuals goes beyond simply splitting the content. To improve retrieval accuracy and user experience, we embed comprehensive metadata into the index for each section or segment. These details provide contextual insights and enable precise and relevant search results. Key metadata components include:

Hierarchy Structure: Captures how each sub-section fits into the broader document organization. For instance, “8.2 Maintenance Action X” is indexed as part of “8 Maintenance,” which in turn belongs to a higher-level section such as “Equipment Component XYZ.” This ensures the system respects the original hierarchy and logical flow of the manual.

Topic Tags: Identifies the specific equipment, component, or topic covered in the segment, such as “Pump A” or “Motor B.” Tags the content type (e.g., maintenance, installation, specifications) to help refine search results and align with user intent.

Summaries: Provides brief overviews of both the parent section and the individual segment. These summaries compensate for the loss of broader context that may occur when a section is extracted from a lengthy manual, helping users quickly understand its relevance.

PDF Page Number or Precise Location: Embeds the exact page number (or equivalent location marker) for each chunk. This ensures traceability and allows users to cross-reference and verify the retrieved information directly within the original manual.

Section Numbering: Includes detailed numbering (e.g., “2.3.24”) to reflect the document’s internal organization, facilitating easy navigation and accurate referencing.

Heading Titles: Incorporates section headings (e.g., “Maintenance Steps for Pump A”) to give users immediate clarity about the content of the segment.

Special Tags for Filtering: Adds tags such as Is_TOC, Is_Table, or Is_Bad_Section to classify and filter segments. For instance:

Is_TOC: Identifies the Table of Contents.

Is_Table: Marks sections containing tabular data.

Is_Bad_Section: Flags sections with incomplete or irrelevant information.

By embedding these rich metadata elements, the system ensures more accurate retrieval, preserves contextual integrity, and enables users to efficiently navigate even the most complex technical manuals.
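The metadata components listed above could be represented as a simple record per segment. The sketch below is hypothetical: the class name `SegmentMetadata`, the field names, and the sample values are ours for illustration, and a production index would store the same information in whatever schema its search backend expects.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SegmentMetadata:
    section_number: str    # e.g. "8.2"
    heading: str           # section heading title
    hierarchy: List[str]   # path from the top-level section down to this one
    topic_tags: List[str]  # equipment or component covered, e.g. "Pump A"
    content_type: str      # e.g. "maintenance", "installation", "specifications"
    summary: str           # brief overview of this segment
    parent_summary: str    # brief overview of the parent section
    page: int              # PDF page number for traceability
    is_toc: bool = False
    is_table: bool = False
    is_bad_section: bool = False


def searchable(segments: List[SegmentMetadata]) -> List[SegmentMetadata]:
    """Drop TOC entries and flagged sections before they reach the search index."""
    return [s for s in segments if not (s.is_toc or s.is_bad_section)]


segments = [
    SegmentMetadata("", "Table of Contents", [], [], "toc", "", "", page=2, is_toc=True),
    SegmentMetadata(
        "8.2",
        "Maintenance Action X",
        ["Equipment Component XYZ", "8 Maintenance", "8.2 Maintenance Action X"],
        ["Pump A"],
        "maintenance",
        "Steps for Maintenance Action X.",
        "Maintenance procedures for the component.",
        page=87,
    ),
]
print([s.heading for s in searchable(segments)])
```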

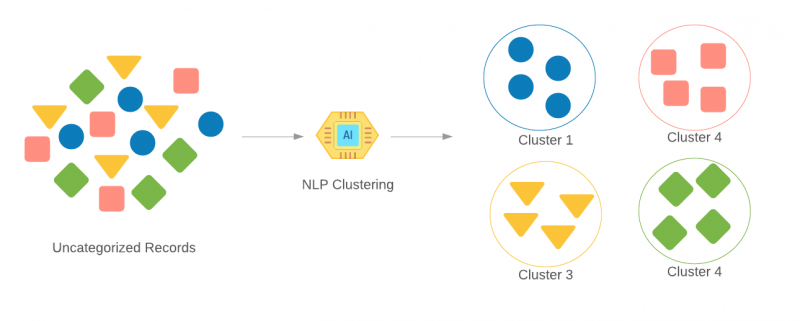

Modular Indexing for Context-Specific Searches

Instead of relying on a single universal index for RAG-based similarity searches, we employ a modular indexing approach tailored to specific objectives. This strategy enhances accuracy and relevance by pre-processing the text to create specialized indexes aligned with distinct content categories and user needs.

Tailored Indexes for Specific Goals: For instance, to build the maintenance index, we parse the manual to extract action-oriented language, focusing on verbs (e.g., “clean,” “replace,” “remove”) and their associated objects. This ensures that the index is optimized for retrieving detailed maintenance actions. Another index focuses on identifying and organizing content related to installation procedures, capturing the step-by-step instructions and key requirements. For queries involving parts and other components, we create an index emphasizing part references, usage instructions, and technical specifications.

Dynamic Query Routing: When a user submits a query, we analyze its intent and context to determine which specialized index to query. For instance, a query related to maintenance procedures is routed to the maintenance-oriented index.

Integrating Results with Metadata: Results retrieved from the specialized index are enriched with additional metadata, such as hierarchy structure, topic tags, section titles, and PDF page numbers. This integration ensures that the retrieved information is both relevant and contextually precise.

Ensuring Context-Specific Accuracy: By querying only the subset of data most relevant to a user’s query, we minimize noise and irrelevant results. For example, a search for “45K-hour overhaul procedures” will prioritize content from the maintenance index while still leveraging metadata to provide the broader context of the manual.
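The routing step can be pictured with a small keyword-based sketch. This is a deliberately simple stand-in for intent analysis: the index names, the keyword sets (beyond the verbs “clean,” “replace,” and “remove” mentioned above), and the `general` fallback are assumptions made for illustration, and a real router might use a classifier or a language model instead.

```python
import re
from typing import Dict, Set

# Hypothetical keyword sets, one per specialized index.
INDEX_KEYWORDS: Dict[str, Set[str]] = {
    "maintenance": {"clean", "replace", "remove", "overhaul", "inspect", "maintenance"},
    "installation": {"install", "installation", "mount", "align"},
    "parts": {"part", "parts", "spare", "specification", "specifications"},
}
DEFAULT_INDEX = "general"  # assumed fallback when no keyword matches


def route_query(query: str) -> str:
    """Return the name of the index whose keywords best match the query."""
    terms = set(re.findall(r"[a-z0-9]+", query.lower()))
    scores = {name: len(terms & keywords) for name, keywords in INDEX_KEYWORDS.items()}
    best = max(scores, key=scores.get)  # ties resolve to the first index listed
    return best if scores[best] > 0 else DEFAULT_INDEX


print(route_query("45K-hour overhaul procedures"))
```

Results from the chosen index would then be joined with the per-segment metadata before being shown to the user.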

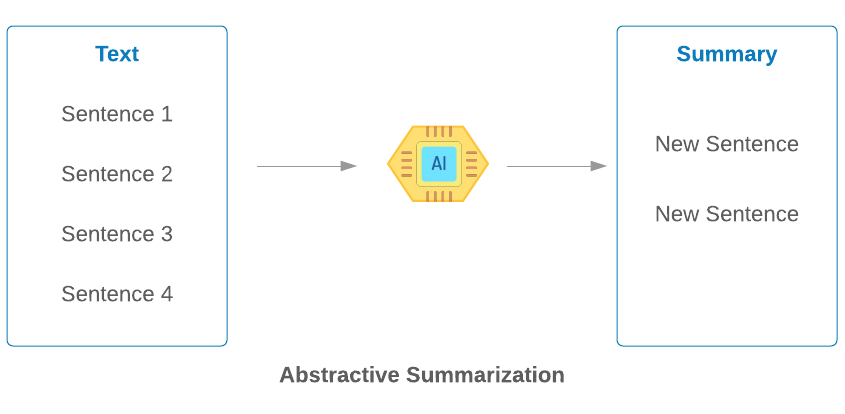

Enhancing Accuracy with LLM Re-Ranking Instead of Synthesis

In many RAG workflows, the system employs a Large Language Model (LLM) to synthesize a single response by summarizing the top N retrieved chunks from a vector search. However, in our approach, we take a different path. Rather than generating a synthesized answer, we use the LLM strictly for re-ranking, enabling us to point users directly to the single most relevant PDF page reference. This method prioritizes maximum fidelity to the original manual content while avoiding potential inaccuracies introduced by intermediate summaries.
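A minimal sketch of re-ranking without synthesis follows. The `relevance` callable is a hypothetical placeholder for the LLM scoring call, and the term-overlap scorer used in the example is only a toy substitute so the snippet runs on its own; the candidate fields (`page`, `section`, `text`) are likewise assumed names.

```python
import re
from typing import Callable, Dict, List


def rerank_to_page(query: str, candidates: List[Dict], relevance: Callable[[str, str], float]) -> Dict:
    """Score each candidate, then return only the location of the best one."""
    if not candidates:
        raise ValueError("no candidates to re-rank")
    ranked = sorted(candidates, key=lambda c: relevance(query, c["text"]), reverse=True)
    top = ranked[0]
    # No generated answer: the user is sent to the original page instead.
    return {"page": top["page"], "section": top["section"]}


def toy_relevance(query: str, text: str) -> float:
    # Stand-in for an LLM relevance judgment: count shared distinct terms.
    q = set(re.findall(r"[a-z0-9]+", query.lower()))
    t = set(re.findall(r"[a-z0-9]+", text.lower()))
    return float(len(q & t))


candidates = [
    {"page": 12, "section": "3 Service Schedule", "text": "Overhaul every 45,000 hours."},
    {"page": 87, "section": "8.2 Overhaul Procedure",
     "text": "Overhaul procedure: remove the casing, inspect bearings, replace seals."},
]
result = rerank_to_page("overhaul procedure steps replace seals", candidates, toy_relevance)
print(result)
```

Because the output is a page reference rather than generated text, the model can rank a passage badly but cannot misstate what the manual says.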

Conclusion:

By integrating balanced chunk sizes, structured metadata, and specialized indexes, our approach provides a flexible yet precise solution for retrieving information from technical manuals. Unlike traditional RAG systems that synthesize answers through a large language model (LLM), our system emphasizes fidelity by directing users to the exact page number in the original manual. This ensures engineers can access complete and unaltered details, including images, tables, and precise wording essential for accurate decision-making.

Additionally, leveraging each document’s inherent organization (such as tables of contents, hierarchy markers, and section numbering) preserves context and significantly reduces noise in search results. This approach ensures that engineers receive clear, accurate, and actionable information tailored to their specific needs, maintaining the integrity and reliability of the source material.