Deploy AI Models as Microservices

Microservices are a software development technique for building an application as a suite of small, independently deployable services, each built around a specific business capability. The idea is to break down a big, monolithic application into a collection of smaller, independent applications.

Why should machine learning models be deployed as microservices?

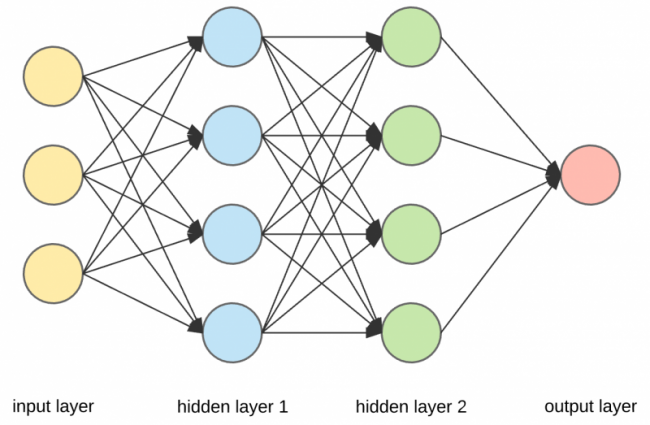

This is an empirical era for machine learning: as successful as deep learning has been, our understanding of why it works so well is still lacking. Machine learning engineers need to explore and experiment with different models before they settle on one that works for their specific use case. Once a model is developed, there are inherent advantages to deploying it in a container and serving it as a microservice.

Here are a few reasons why it makes sense to deploy AI models as microservices:

- Microservices are smaller and easier to understand than a large monolithic application. Each one is focused on a business function, which makes it simpler to deploy a single specific function without worrying about all the others.

- Each service can be deployed independently of the others. This also allows each service to be scaled on its own, rather than scaling the entire application. That is a much more efficient use of computing capacity and helps balance resource allocation. Deploying microservices in a container architecture allows for further efficiency in scaling.

- Because each service is focused on a specific business function, developers only need to understand a small set of functions rather than the entire application.

- Offering the model as a service makes it possible to expose it to both internal and external applications without having to move the code. It also gives consumers access to data through well-defined interfaces. Containers have built-in mechanisms for external and distributed data access, so you can leverage common data-oriented interfaces that support many data models.

- Each team has the luxury of choosing whatever languages and tools suit its job without affecting anyone else, which helps avoid vendor or technology lock-in. By deploying machine learning models as microservices with API endpoints, data scientists and AI programmers can write models in whatever framework they prefer (TensorFlow, PyTorch, or Keras) without worrying about technology stack compatibility.

- Microservices allow new versions to be deployed in parallel with, and independently of, other services. Developers can work in parallel and get changes to production independently and faster. This enables continuous delivery and deployment of large, complex machine learning applications. With production-ready frameworks like TensorFlow Serving, managing multiple versions of a model becomes much easier.

- Models can be deployed to any environment: local, private cloud, or public cloud. If there are data privacy concerns about deploying AI models in the cloud, packaging individual models as containers allows them to be deployed in the local environment instead.

- Most AI projects involve several models, each developed for a specific function (e.g., one model for Named Entity Recognition, another for Information Extraction). Microservices allow these models to be developed, updated, and deployed independently.
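
To make the points above concrete, here is a minimal sketch of a model exposed behind an HTTP API endpoint, with two versions served side by side. It uses only the Python standard library. The two `sentiment_*` functions are hypothetical stand-ins for trained models, and the `/<version>/predict` route layout, the `handle_predict` helper, and the port are assumptions made for illustration, not part of any particular framework.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Tuple

# Hypothetical stand-ins for trained models. A real service would load
# TensorFlow, PyTorch, or Keras artifacts here instead.
def sentiment_v1(text: str) -> dict:
    return {"label": "positive" if "good" in text.lower() else "negative"}

def sentiment_v2(text: str) -> dict:
    positive = {"good", "great", "excellent"}
    hits = sum(word in positive for word in text.lower().split())
    return {"label": "positive" if hits else "negative", "hits": hits}

# Both versions stay available, so callers can migrate at their own pace.
MODELS = {"v1": sentiment_v1, "v2": sentiment_v2}

def handle_predict(path: str, body: bytes) -> Tuple[int, dict]:
    """Route /<version>/predict to the matching model; return (status, payload)."""
    parts = path.strip("/").split("/")
    if len(parts) != 2 or parts[1] != "predict" or parts[0] not in MODELS:
        return 404, {"error": "unknown endpoint"}
    try:
        text = json.loads(body)["text"]
    except (ValueError, KeyError, TypeError):
        return 400, {"error": "expected a JSON object with a 'text' field"}
    if not isinstance(text, str):
        return 400, {"error": "'text' must be a string"}
    return 200, MODELS[parts[0]](text)

class ModelHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read the request body, delegate to the routing function, reply as JSON.
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_predict(self.path, self.rfile.read(length))
        data = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

def serve(port: int = 8080) -> None:
    """Start the service; blocks until interrupted."""
    HTTPServer(("0.0.0.0", port), ModelHandler).serve_forever()
```

Calling `serve()` starts the service; a client would then POST a body such as `{"text": "good movie"}` to `/v1/predict`. Keeping the routing in a plain function, separate from the HTTP handler, lets the model logic be tested without starting a server.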

Now let’s talk about some technologies that help with deploying models as microservices. Here we want to focus on two prominent technologies that allow for this to happen.

Docker:

Docker helps you create and deploy microservices within containers. It’s an open source collection of tools that help you build and run any app, anywhere. There are plenty of resources on the internet covering Docker basics and how to get started.
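
As a rough illustration of what packaging a model service for Docker involves, the sketch below renders a small Dockerfile from Python. The base image tag, the `service.py` entrypoint, and the port are all assumptions chosen for the example; a real model would also need its framework dependencies installed in the image.

```python
import json

def render_dockerfile(base_image: str = "python:3.11-slim",
                      entrypoint: str = "service.py",
                      port: int = 8080) -> str:
    """Render a minimal Dockerfile for a single-file Python service."""
    lines = [
        f"FROM {base_image}",        # start from a base image with Python installed
        "WORKDIR /app",              # working directory inside the container
        f"COPY {entrypoint} .",      # copy the (hypothetical) service script in
        f"EXPOSE {port}",            # document the port the service listens on
        "CMD " + json.dumps(["python", entrypoint]),  # exec-form start command
    ]
    return "\n".join(lines) + "\n"

dockerfile_text = render_dockerfile()
print(dockerfile_text)
```

Saving the printed text as `Dockerfile` next to the service script would let `docker build` produce an image, which `docker run` with a published port could then start.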

Kubernetes:

When it comes to deploying microservices as containers, another aspect to keep in mind is the management of the individual containers. If you want to run multiple containers across multiple machines, which you’ll need to do if you’re using microservices, you will need to manage them efficiently. You have to start the right containers at the right time, make them talk to each other, handle storage and memory considerations, and deal with failed containers or hardware. Doing all of this manually would be a nightmare, so a tool like Kubernetes is critical. Kubernetes is an open source container orchestration platform that lets large numbers of containers work together in harmony and reduces the operational burden.
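
To give a sense of how this is expressed in practice, here is a sketch that builds a Kubernetes Deployment manifest as a Python dictionary and prints it as JSON. The service name, the image reference on `registry.example.com`, the replica count, and the resource limits are hypothetical values picked for illustration.

```python
import json

def build_deployment(name: str, image: str,
                     replicas: int = 3, port: int = 8080) -> dict:
    """Describe a Deployment that keeps `replicas` copies of one container running."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Kubernetes replaces failed pods to keep this many running.
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # Illustrative per-container CPU and memory caps.
                        "resources": {"limits": {"cpu": "500m", "memory": "512Mi"}},
                    }]
                },
            },
        },
    }

manifest = build_deployment("ner-model", "registry.example.com/ner-model:1.0")
print(json.dumps(manifest, indent=2))
```

Kubernetes accepts manifests in JSON as well as YAML, so the printed output could be saved to a file and applied with `kubectl apply -f`. Scaling the model up or down then comes down to changing the `replicas` value.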

Used together, Docker and Kubernetes are great tools for developing a modern AI cloud architecture.