Contributing Author: Wei-Fan Chiang, AI Engineer @ Abeyon.

In machine learning and natural language processing, topic modeling is a type of statistical model for discovering the abstract “topics” that occur in a collection of documents. It is a frequently used text-mining tool for discovering hidden semantic structures in a body of text.

In this fast-paced era of information explosion, our daily lives are affected by events around the entire globe, all of which deserve some degree of attention. Unfortunately, no one can follow every single one, so we rely on trends: the topics that draw the most concern and the most discussion.

The common topic modeling approach:

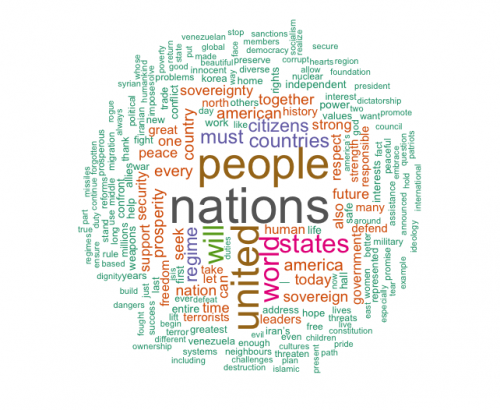

One commonly used approach to learning trends is unsupervised clustering. It starts with a collection of documents from various online sources, such as news articles and social media posts, and groups similar documents together. Once the groups are established, human annotation is required to create word clouds that summarize and represent each group.
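To make the common approach concrete, here is a minimal Python sketch. It assumes bag-of-words vectors, a toy stop-word list, and k-means with cosine similarity; none of these choices comes from the description above, which does not name a clustering algorithm. The most frequent words in each cluster stand in for the raw material a word cloud is built from.

```python
import math
import re
from collections import Counter

# A tiny stop-word list, for illustration only.
STOP_WORDS = {"the", "a", "an", "of", "to", "in", "and", "on", "for", "as", "is", "are"}


def tokenize(text):
    """Lowercase the text, keep alphabetic tokens, and drop stop words."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS]


def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(x * v.get(k, 0.0) for k, x in u.items())
    norm = math.sqrt(sum(x * x for x in u.values())) * math.sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0


def kmeans(vectors, k, iters=10):
    """k-means with cosine similarity; centroids start at the first k vectors."""
    centroids = [dict(v) for v in vectors[:k]]
    labels = [0] * len(vectors)
    for _ in range(iters):
        # Assignment step: each document joins its most similar centroid.
        labels = [max(range(k), key=lambda c: cosine(v, centroids[c])) for v in vectors]
        # Update step: each centroid becomes the mean of its member vectors.
        for c in range(k):
            members = [v for v, lab in zip(vectors, labels) if lab == c]
            if members:
                total = Counter()
                for m in members:
                    total.update(m)
                centroids[c] = {w: x / len(members) for w, x in total.items()}
    return labels


def cluster_top_words(docs, k, top_n=3):
    """Cluster documents, then list each cluster's most frequent words."""
    vectors = [Counter(tokenize(d)) for d in docs]
    labels = kmeans(vectors, k)
    top_words = {}
    for c in range(k):
        counts = Counter()
        for v, lab in zip(vectors, labels):
            if lab == c:
                counts.update(v)
        top_words[c] = [w for w, _ in counts.most_common(top_n)]
    return labels, top_words


docs = [
    "Central bank raises interest rates to fight inflation",
    "Storm floods coastal towns and forces evacuations",
    "Inflation pressures push the central bank toward higher rates",
    "Evacuations continue as floods hit more coastal towns",
]
labels, top_words = cluster_top_words(docs, k=2)
print(labels)
print(top_words)
```

The output is exactly the kind of word list the next section calls a riddle: it says which words co-occur, not what happened.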

Why is it not enough?

For many applications, a simple algorithm such as LDA (Latent Dirichlet Allocation) may be perfectly sufficient. At Abeyon, however, we choose not to settle for word clouds, because they are like riddles: they are composed of individual words, and a human must interpret them to guess what story the words are trying to tell.

We don’t want riddles or word clouds. Instead, we aim to produce complete, human-readable sentences that explain trends and offer an initial interpretation of the topic modeling results. The biggest challenge in achieving this is: how do we summarize a group of documents written by different authors in different tones and with different phrasing? This is the same problem that text summarization tries to solve, albeit within a single document (see our previous post Bring clarity to unstructured data using Natural Language Processing (NLP) – Part 2 (abeyon.com)).

Step 1: Group documents with words

To discover the world’s ongoing trends, our topic modeling solution first performs a coarse-grained grouping. From a huge collection of documents, we use text classification to extract the documents that belong to each category of interest (such as economics or society). Within each category, we then apply a traditional topic modeling algorithm such as LDA (blei03a.dvi (jmlr.org)) to split the category further into groups.
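The two-stage grouping can be sketched in Python as below. This is an illustration under stated assumptions, not Abeyon's implementation: the keyword-overlap `classify` function and its `CATEGORY_KEYWORDS` table are hypothetical stand-ins for a trained text classifier, and `lda_gibbs` is a bare-bones collapsed Gibbs sampler for LDA that assigns each document to its dominant topic.

```python
import random
from collections import defaultdict

# Hypothetical keyword lists standing in for a trained text classifier.
CATEGORY_KEYWORDS = {
    "economics": {"inflation", "rates", "bank", "market", "jobs"},
    "society": {"school", "housing", "health", "community"},
}


def classify(tokens):
    """Stage 1 (coarse): pick the category with the most keyword hits, or None."""
    scores = {c: len(kw & set(tokens)) for c, kw in CATEGORY_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


def lda_gibbs(docs, num_topics, alpha=0.1, beta=0.01, iters=200, seed=0):
    """Stage 2 (fine): collapsed Gibbs sampling for LDA; returns each doc's dominant topic."""
    rng = random.Random(seed)
    vocab_size = len({w for doc in docs for w in doc})
    doc_topic = [[0] * num_topics for _ in docs]                # n[d][k]
    topic_word = [defaultdict(int) for _ in range(num_topics)]  # n[k][w]
    topic_total = [0] * num_topics                              # n[k]
    z = []  # topic assignment of every token
    for d, doc in enumerate(docs):
        z.append([])
        for w in doc:
            k = rng.randrange(num_topics)
            z[d].append(k)
            doc_topic[d][k] += 1
            topic_word[k][w] += 1
            topic_total[k] += 1
    for _ in range(iters):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                k = z[d][i]
                # Take the token out of the counts before resampling it.
                doc_topic[d][k] -= 1
                topic_word[k][w] -= 1
                topic_total[k] -= 1
                # p(z = t | everything else), up to a normalizing constant.
                weights = [
                    (doc_topic[d][t] + alpha) * (topic_word[t][w] + beta)
                    / (topic_total[t] + vocab_size * beta)
                    for t in range(num_topics)
                ]
                k = rng.choices(range(num_topics), weights=weights)[0]
                z[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][w] += 1
                topic_total[k] += 1
    return [max(range(num_topics), key=row.__getitem__) for row in doc_topic]


def group_documents(texts, topics_per_category=2):
    """Classify each text, then run LDA separately inside each category."""
    by_category = defaultdict(list)
    for text in texts:
        tokens = text.lower().split()
        category = classify(tokens)
        if category is not None:  # drop documents outside every category of interest
            by_category[category].append(tokens)
    return {
        c: list(zip(docs, lda_gibbs(docs, topics_per_category)))
        for c, docs in by_category.items()
    }
```

Running LDA per category rather than on the whole collection keeps each topic model focused on one domain. In practice, a library implementation such as scikit-learn's `LatentDirichletAllocation` or gensim's `LdaModel` would normally replace the hand-written sampler.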

Step 2: Let documents speak

From a group of documents, we look for a few conclusive sentences that explain the trend behind the group. This is beyond the capability of traditional topic modeling algorithms. Our idea is to let each document state what it thinks the trend is. Using semantic role labeling (Semantic role labeling – Wikipedia), we can extract statements in a “who-does-what” (or “who-did-what”) format from each document.
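A minimal sketch of turning semantic role labels into “who-did-what” statements follows. It assumes an SRL tagger that emits PropBank-style BIO tags per predicate, where `ARG0` marks the agent (who), `V` the predicate (does/did), and `ARG1` the patient (what). The frames below are hand-written; no SRL model is called, and the helper names `who_did_what` and `extract_statements` are hypothetical.

```python
def who_did_what(tokens, tags):
    """Collapse one predicate's BIO-tagged SRL frame into an 'ARG0 V ARG1' phrase.

    Modifier roles such as ARGM-TMP are ignored; returns None if the agent,
    predicate, or patient is missing.
    """
    spans = {}  # role -> tokens carrying that role (assumes one span per role)
    for token, tag in zip(tokens, tags):
        if tag == "O":
            continue
        _, role = tag.split("-", 1)  # "B-ARGM-TMP" -> ("B", "ARGM-TMP")
        spans.setdefault(role, []).append(token)
    who, did, what = spans.get("ARG0"), spans.get("V"), spans.get("ARG1")
    if not (who and did and what):
        return None
    return " ".join(who + did + what)


def extract_statements(frames):
    """frames: one (tokens, tags) pair per predicate, as an SRL tagger might emit."""
    statements = (who_did_what(tokens, tags) for tokens, tags in frames)
    return [s for s in statements if s]


# Hand-written example frames (no SRL model is called here).
tokens = ["The", "central", "bank", "raised", "interest", "rates", "on", "Tuesday", "."]
tags = ["B-ARG0", "I-ARG0", "I-ARG0", "B-V", "B-ARG1", "I-ARG1", "B-ARGM-TMP", "I-ARGM-TMP", "O"]
passive = (["Rates", "were", "raised"], ["B-ARG1", "O", "B-V"])
print(extract_statements([(tokens, tags), passive]))
```

Dropping modifier roles (such as the time role `ARGM-TMP`) and frames without an agent keeps the statements short, at the cost of discarding agentless passives like “Rates were raised”.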

Step 3: Let documents vote

From the pool of “who-does-what” sentences, we let the documents vote for the most conclusive ones. Using the zero-shot learning technique (Zero-shot learning – Wikipedia), we do this by calculating a similarity score (e.g., cosine similarity; see Cosine similarity – Wikipedia) between each “who-does-what” sentence and the documents. In this sense, the sentence with the highest score is the one that receives the most favor from the documents. That sentence therefore best represents the documents in the group and best explains the group’s overarching trend.
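The voting step can be sketched as follows. In a zero-shot setup, `embed` would be a pretrained sentence encoder used without task-specific training; here a bag-of-words vector stands in for it so the example runs with the standard library only, which means it shows the voting mechanics rather than real semantic similarity. Summing each candidate's cosine similarity over all documents is our assumption about how the votes are aggregated.

```python
import math
import re
from collections import Counter


def embed(text):
    """Stand-in encoder: a bag-of-words count vector.

    A zero-shot setup would call a pretrained sentence encoder here instead.
    """
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(u, v):
    """Cosine similarity of two Counter vectors (missing words count as 0)."""
    dot = sum(x * v[w] for w, x in u.items())
    norm = math.sqrt(sum(x * x for x in u.values())) * math.sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0


def vote(candidates, documents):
    """Each document 'votes' with its similarity to each candidate; the highest total wins."""
    doc_vecs = [embed(d) for d in documents]
    scores = {}
    for sentence in candidates:
        vec = embed(sentence)
        scores[sentence] = sum(cosine(vec, dv) for dv in doc_vecs)
    winner = max(scores, key=scores.get)
    return winner, scores


candidates = [
    "The central bank raised interest rates",
    "A reporter visited the museum",
]
documents = [
    "The central bank raised interest rates again to curb inflation",
    "Interest rates climbed after the bank decision",
    "Markets fell as the central bank raised rates",
]
winner, scores = vote(candidates, documents)
print(winner)
```

Because every candidate is scored against the same set of documents, averaging instead of summing would produce the same ranking.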

We have built our topic modeling solution on the shoulders of traditional topic modeling algorithms, extending them with semantic role labeling and zero-shot learning. This technology allows us to learn global trends not from obscure word clouds and riddles but directly from proper sentences in natural language.
