Create Specific-Task LLMs – Part 1 – From Prompt Engineering to Fine-Tuning

Introduction

In the world of artificial intelligence (AI) and machine learning, Large Language Models (LLMs) like GPT-3 have fundamentally transformed how we handle vast quantities of data and knowledge. However, when working on domain-specific AI tasks such as classification or Named Entity Recognition (NER), the need for specialized LLMs becomes apparent. Here we explain why specific-task LLMs are essential, how to begin with prompt engineering, and why the transition to fine-tuning is crucial for getting optimal performance from a specific-task LLM. Abeyon’s AI team has invested significant resources into researching these topics, and this article shares the insights and conclusions from that work.

Why Specific-Task LLMs Are Necessary

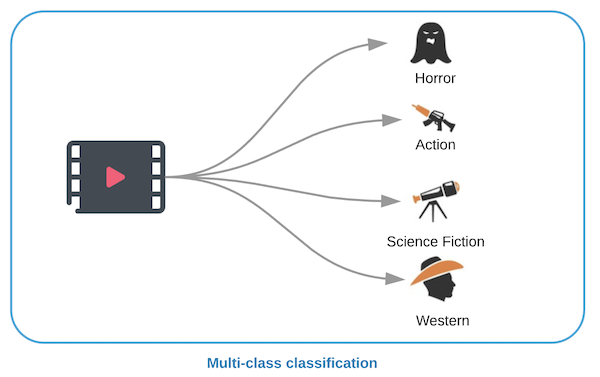

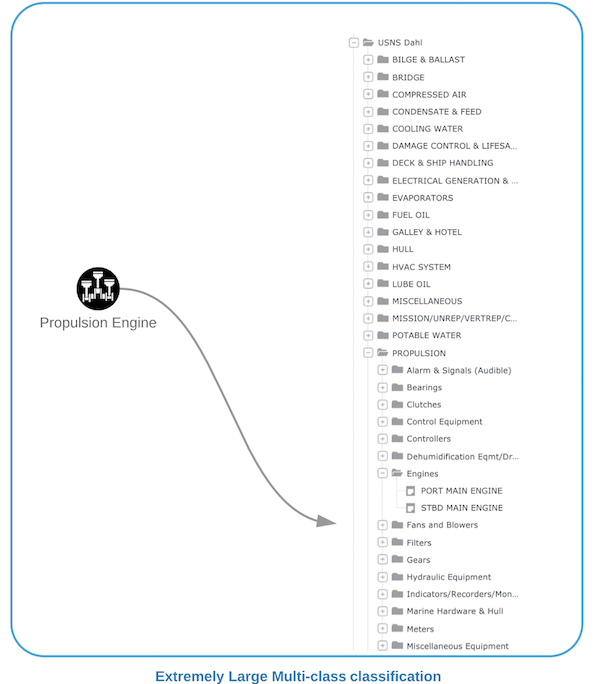

General-purpose LLMs, while impressive in their breadth, often lack the precision required for specific tasks. In scenarios like NER or classification, where high accuracy is paramount, specific-task LLMs become crucial. This consideration is especially vital in Abeyon’s projects, where clients expect precise and insightful statistics as an output. The design of these specific-task LLMs focuses on a few key factors:

- High Accuracy Over Creativity: The primary goal of these models is accuracy. In specific-task applications, precise information matters more than creative output, whereas creativity is often an important quality in a general-purpose LLM.

- Consistency: Consistent answers to the same and/or similar queries are vital, ensuring reliability and trust in the model’s outputs.

- Response time: Optimizing response time is another critical goal for specific-task LLMs. In many real-world applications, such as customer service or real-time data analysis, the speed of a response can be as important as its accuracy. Streamlining the model to focus on specific tasks reduces the computational load, which leads to faster responses. This is particularly beneficial in high-volume, time-sensitive environments like financial market analysis or on-the-fly translation, where quick and accurate responses are essential.

- Focus on Specific Domain Knowledge: By concentrating on domain-specific text, these LLMs can provide responses that adhere to the logic and terminology of the target domain. This is especially important in domains that use technical or specialized language, like marine engineering, or in content-sensitive contexts, such as materials intended for children. Organizations that manage large quantities of complex internal documents have found this feature especially useful, because these LLMs were able to adapt to the particular structure of their documents and the vernacular in which they were written.

Starting with Prompt Engineering

The journey toward customizing LLMs for specific tasks begins with prompt engineering. This critical step requires a delicate balance: prompts must be clear and detailed enough to guide the LLM effectively, yet not so verbose or complex that they obscure the intended task. The essence of prompt engineering lies in succinctly communicating the task requirements and the desired logic flow to the model. Effective prompt engineering is not just about instructing the LLM; it’s about setting the stage for successful fine-tuning. By crafting prompts that are comprehensive yet straightforward, we can identify the model’s current limitations and the areas where fine-tuning is necessary. These prompts act as preliminary tests, revealing how well the LLM grasps specific concepts and logic, and where it deviates or struggles.
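To make the balance between clarity and brevity concrete, here is a minimal sketch of a classification prompt that states the task once, gives explicit label definitions, and spells out the desired logic flow as numbered steps. The labels, definitions, steps, and the `build_prompt` helper are hypothetical, chosen only for illustration; they are not Abeyon’s actual prompts.

```python
# Hypothetical label definitions for an illustrative message-classification task.
LABELS = {
    "BILLING": "The message is about charges, invoices, or refunds.",
    "TECHNICAL": "The message reports a malfunction or asks how to use a feature.",
    "OTHER": "The message fits neither category above.",
}

# Hypothetical logic flow, written as a short step-by-step (CoT-style) guide.
STEPS = [
    "Identify the main request in the message.",
    "Compare that request against each label definition.",
    "If more than one label fits, choose the one that matches the main request.",
    "Reply with the label name only.",
]


def build_prompt(message: str) -> str:
    """Assemble the task statement, definitions, steps, and input into one prompt."""
    definitions = "\n".join(f"- {name}: {text}" for name, text in LABELS.items())
    steps = "\n".join(f"{i}. {step}" for i, step in enumerate(STEPS, start=1))
    return (
        "Classify the customer message into exactly one label.\n\n"
        f"Label definitions:\n{definitions}\n\n"
        f"Follow these steps:\n{steps}\n\n"
        f"Message: {message}\nLabel:"
    )


print(build_prompt("I was charged twice for my subscription."))
```

Keeping the definitions and steps in separate structures makes it easy to add, remove, or reword one element at a time and observe how the model’s answers change.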

Diversity in prompt construction is vital. From step-by-step Chain of Thought (CoT) guides to explicit definitions, each type of prompt probes a different aspect of the model’s behavior. Prompts are not just commands but tools for mapping out what the model still needs to learn. By using them, we can pinpoint where the model’s understanding is lacking and thus identify potential training points for fine-tuning. Through this process, Abeyon has discovered qualities in our clients’ data that they were previously unaware of and developed a deep understanding of the unique dynamics of those projects. This is especially helpful when the logic behind a process is inherently complex, so the solution must be robust and able to handle considerable variation in the client’s data.
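One way to turn prompt variants into preliminary tests is sketched below: each labeled probe case is run through several prompt styles (plain, with definitions, with a CoT guide), and cases that fail under every variant are grouped by a tag naming the concept they exercise. Those groups are candidate training points, since better prompting alone did not fix them. The `fake_llm` function is a stand-in for a real model call, and the cases, tags, and variant texts are hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List, NamedTuple


class ProbeCase(NamedTuple):
    text: str      # input to classify
    expected: str  # correct label
    tag: str       # hypothetical tag naming the concept the case exercises


# Prompt variants; each puts the input on the last line of the prompt.
# A real CoT variant would also need the final label parsed out of the reasoning.
VARIANTS: Dict[str, Callable[[str], str]] = {
    "plain": lambda t: f"Classify: {t}",
    "definitions": lambda t: (
        "BILLING = charges or refunds; TECHNICAL = malfunctions; OTHER = anything else.\n"
        f"Classify: {t}"
    ),
    "cot": lambda t: f"Think step by step, then give only the label.\nClassify: {t}",
}


def find_training_points(
    cases: List[ProbeCase], llm: Callable[[str], str]
) -> Dict[str, List[str]]:
    """Group inputs that fail under every prompt variant by their tag."""
    points: Dict[str, List[str]] = defaultdict(list)
    for case in cases:
        answers = [llm(make(case.text)).strip() for make in VARIANTS.values()]
        if all(answer != case.expected for answer in answers):
            points[case.tag].append(case.text)
    return dict(points)


def fake_llm(prompt: str) -> str:
    # Stand-in model: reads only the input line and says BILLING if it sees "charge".
    text = prompt.splitlines()[-1]
    return "BILLING" if "charge" in text.lower() else "OTHER"


cases = [
    ProbeCase("I was charged twice.", "BILLING", "billing-basic"),
    ProbeCase("The app crashes on login.", "TECHNICAL", "technical-crash"),
    ProbeCase("Free charger with every order?", "OTHER", "lookalike-terms"),
]
print(find_training_points(cases, fake_llm))
# {'technical-crash': ['The app crashes on login.'], 'lookalike-terms': ['Free charger with every order?']}
```

Requiring a case to fail under all variants is a deliberately conservative choice: a case that fails only with the plain prompt points to a prompt fix, while one that fails even with definitions and a CoT guide is a stronger candidate for fine-tuning.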

In essence, prompt engineering is the art of balancing clarity with brevity. It’s about designing prompts that are sufficiently informative to guide the LLM toward the desired reasoning path, yet simple enough to prevent unnecessary complications. This careful crafting of prompts sets the foundation for the fine-tuning process, ensuring that the LLM not only understands the task at hand but also follows the correct logic to arrive at accurate conclusions.

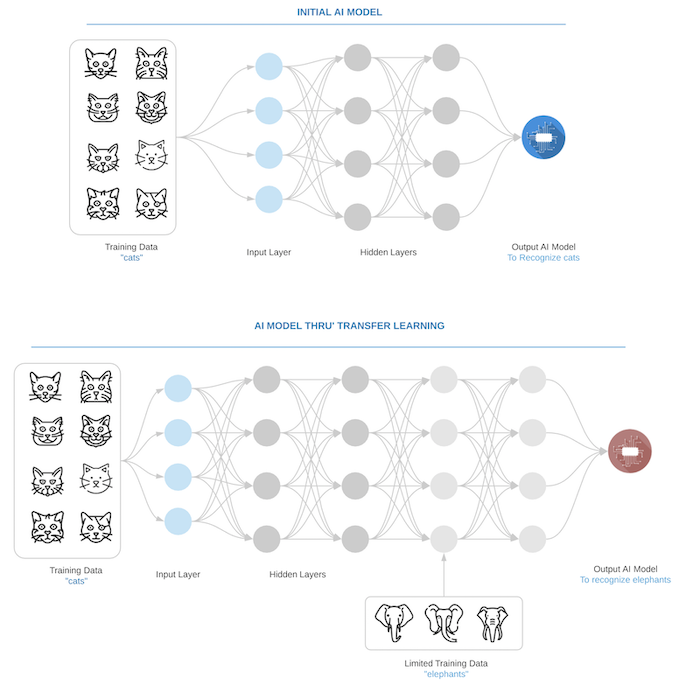

Recognizing the Limitations of Prompts and Transitioning to Fine-Tuning

It’s important to clarify a common misconception about fine-tuning. While some references suggest that fine-tuning is primarily about adjusting the output format, its role is far broader. Fine-tuning can train the LLM to follow a specific logic path, particularly when the original model does not reason soundly. It can also help the model interpret material supplied in the prompt more accurately, whether that material is retrieved through Retrieval-Augmented Generation (RAG) or given as instructions.

This capability helped Abeyon meet client needs in a project where our LLM had to apply special, organization-specific definitions of a set of terms that often differed from the everyday meanings those words carry in ordinary contexts. As conflicts between the two sets of definitions were identified, our developers faced a unique challenge: the LLM essentially had to unlearn the conventional meanings of these words that it had absorbed during pre-training and adapt to our client’s specific needs and context. Without further training, the LLM drew incorrect connections and conclusions from the retrieved material, which demonstrated a clear need for fine-tuning.
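As an illustration of how such a definition override might be expressed as training data, the sketch below builds chat-style records, each a `messages` list of `system`, `user`, and `assistant` turns (a layout several fine-tuning services accept as JSON Lines). The user turn carries retrieved text, and the assistant turn applies the organization’s meaning rather than the everyday one. The term "release", its definition, and the part number are hypothetical, not the client’s actual terms; the exact file format your fine-tuning provider expects should be checked against its documentation.

```python
import json
from typing import Dict, List

# Hypothetical organization-specific definition that conflicts with everyday usage.
ORG_DEFINITIONS = {
    "release": "a signed approval to ship a part to a customer, not a software version",
}

SYSTEM_PROMPT = "Answer using the organization's definitions. " + " ".join(
    f'"{term}" means {meaning}.' for term, meaning in ORG_DEFINITIONS.items()
)


def make_record(question: str, answer: str) -> Dict[str, List[Dict[str, str]]]:
    """Build one chat-style training example (system, user, assistant turns)."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


# The user turn carries retrieved text; the assistant turn shows the
# organization-specific reading we want the model to learn.
records = [
    make_record(
        "Retrieved note: 'Release pending for part 7731.' Can part 7731 ship yet?",
        "No. The release, meaning the signed approval to ship, is still pending.",
    ),
]

# JSON Lines: one JSON object per line, ready to save as a .jsonl training file.
jsonl = "\n".join(json.dumps(record) for record in records)
print(jsonl)
```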

Another significant benefit of fine-tuning is improvement in both accuracy and response time. The refined understanding yields more accurate answers, and because behavior learned during fine-tuning no longer has to be spelled out in long prompts full of instructions and examples, requests become shorter and responses can be returned more quickly. This enhancement is particularly vital in applications where timely and precise information delivery is critical.

Once the limitations of prompt engineering are clear and the decision to fine-tune has been made, the next step is to address each identified training point in a targeted way. This process is meticulous and requires careful planning to ensure the LLM is accurately fine-tuned for the specific tasks at hand.

- Tuning on Each Identified Training Point: The fine-tuning process should systematically address each training point identified during the prompt testing phase. This means that every instance where the LLM’s response was not accurate, consistent, or logical needs to be revisited. Fine-tuning at this stage is not a blanket approach; it’s about pinpointing specific areas of improvement and addressing them individually.

- Creating Training Data: For each identified point, you may need to correct existing LLM responses or write new ones. This tailored data is crucial because it directly addresses the gaps or inaccuracies revealed during prompt testing. The data must be representative of the scenarios the LLM struggled with, so that the model learns from relevant, contextual examples.

- Developing Test Cases for Each Training Point: Alongside modifying training data, it’s equally important to develop specific test cases for each training point. These test cases will serve as benchmarks to evaluate the LLM’s learning and adaptation post-fine-tuning. They should be designed to rigorously test the LLM’s ability to handle previously challenging tasks and scenarios, providing a clear measure of the effectiveness of the fine-tuning process.
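The steps above can be organized by keeping each training point’s training examples and test cases together, so every fix carries its own benchmark. In the minimal sketch below, `TrainingPoint`, `training_set`, `evaluate`, and the stand-in `base_model` are hypothetical names; the model call is passed in as a function so the same test cases can score both the base model and the fine-tuned model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Example = Tuple[str, str]  # (input text, expected output)


@dataclass
class TrainingPoint:
    """One identified weakness, with its own training data and test cases."""
    name: str
    train_examples: List[Example] = field(default_factory=list)
    # Keep test cases distinct from training examples so the score measures learning.
    test_cases: List[Example] = field(default_factory=list)


def training_set(points: List[TrainingPoint]) -> List[Example]:
    """Flatten every point's training examples into one fine-tuning set."""
    return [example for point in points for example in point.train_examples]


def evaluate(points: List[TrainingPoint], llm: Callable[[str], str]) -> Dict[str, float]:
    """Return the pass rate on each training point's own test cases."""
    scores: Dict[str, float] = {}
    for point in points:
        passed = sum(llm(text).strip() == expected for text, expected in point.test_cases)
        scores[point.name] = passed / len(point.test_cases) if point.test_cases else 0.0
    return scores


def base_model(text: str) -> str:
    # Stand-in for the base model: treats any mention of "charge" as billing.
    return "BILLING" if "charge" in text.lower() else "OTHER"


points = [
    TrainingPoint(
        "lookalike-terms",
        train_examples=[("Free charger with every order?", "OTHER")],
        test_cases=[("Charger cables on sale?", "OTHER"), ("Please refund my charge.", "BILLING")],
    ),
]
print(len(training_set(points)))     # 1
print(evaluate(points, base_model))  # {'lookalike-terms': 0.5}
```

Running `evaluate` once with the base model and again with the fine-tuned model gives a per-point before/after comparison, which is exactly the measure of effectiveness the test cases are meant to provide.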

We only need a handful of training examples and test cases for each training point. This stage of the development process is crucial for refining the LLM’s abilities. By focusing on each training point with tailored data and test cases, the fine-tuning process becomes more precise and effective, producing a model that not only performs better in accuracy and response time but also demonstrates stronger understanding and logical reasoning in line with the specific requirements of the task.

After initial prompt engineering and fine-tuning, it’s vital to return to prompt engineering and continuous testing. This iterative process ensures ongoing improvement and adaptation of the LLM. Abeyon applies this philosophy of constant verification and re-evaluation to keep LLM performance reliable, accurate, and consistent.

- Iterative Prompt Refinement: Post-fine-tuning, revisit your prompts. Refine them based on the insights gained from the fine-tuning process. This could involve simplifying prompts, introducing new formats, or making them more specific.

- Continuous Testing: Regularly test the LLM with new prompts and scenarios. This ongoing testing helps identify lingering issues or new areas where the LLM might struggle. It’s an essential part of keeping the model effective and accurate over time.

- Feedback Loop: Establish a feedback loop in which the results of continuous testing inform further prompt engineering and fine-tuning. This loop keeps the LLM adapting to changing requirements and new challenges.

- User Interaction Analysis: If possible, analyze how users interact with the LLM. User interactions can provide valuable insights into how well the LLM is performing and highlight areas for further improvement.
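The continuous-testing and feedback-loop steps can be sketched as a small regression harness. Each test case is run several times, so consistency (the share of runs that match the most common answer) is measured alongside accuracy and mean latency, and any training point below a threshold is flagged for another round of prompt refinement or fine-tuning. The `run_regression` name, the default of three repeats, the 0.9 thresholds, and the alternating `flaky_llm` stand-in are all assumptions for illustration.

```python
import time
from collections import Counter
from itertools import cycle
from statistics import mean
from typing import Callable, Dict, List, Tuple

TestCase = Tuple[str, str]  # (input text, expected output)


def run_regression(
    suites: Dict[str, List[TestCase]],
    llm: Callable[[str], str],
    repeats: int = 3,
    min_accuracy: float = 0.9,
    min_consistency: float = 0.9,
) -> Dict[str, dict]:
    """Score each training point's test suite and flag weak points for rework."""
    report: Dict[str, dict] = {}
    for point, cases in suites.items():
        if not cases:
            continue  # nothing to measure for an empty suite
        correct, agreeing, latencies = 0, 0, []
        for text, expected in cases:
            answers = []
            for _ in range(repeats):
                start = time.perf_counter()
                answers.append(llm(text).strip())
                latencies.append(time.perf_counter() - start)
            correct += sum(answer == expected for answer in answers)
            # Consistency: how many runs match the most common answer for this input.
            agreeing += Counter(answers).most_common(1)[0][1]
        runs = len(cases) * repeats
        accuracy, consistency = correct / runs, agreeing / runs
        report[point] = {
            "accuracy": accuracy,
            "consistency": consistency,
            "mean_latency_s": mean(latencies),
            # Flagged points go back into prompt refinement or another fine-tuning round.
            "needs_work": accuracy < min_accuracy or consistency < min_consistency,
        }
    return report


# Stand-in model whose answer alternates between calls, to simulate inconsistency.
_answers = cycle(["OTHER", "BILLING"])


def flaky_llm(text: str) -> str:
    return next(_answers)


suites = {"lookalike-terms": [("Charger cables on sale?", "OTHER")]}
report = run_regression(suites, flaky_llm)
print(report["lookalike-terms"]["needs_work"])  # True (2 of 3 runs agree)
```

Scheduling this harness after every prompt change or fine-tuning run, and adding new test cases whenever users surface a failure, closes the loop described in the list above.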

Conclusion

The journey from prompt engineering to fine-tuning specific-task LLMs is a testament to the evolving nature of machine learning. By focusing on accuracy, consistency, and the quality of data and prompts, these models are fine-tuned to deliver precise results in specialized domains. The future of specific-task LLMs for tasks like NER and classification is bright, promising more tailored and effective solutions to domain-specific problems.